Submit: Turn in your entire xinu-hw7 directory of source files using the turnin command on morbius.mscsnet.mu.edu or one of the other Systems Lab machines. Please run "make clean" in the compile/ subdirectory just before submission to reduce unnecessary space consumption.

Work should be completed in pairs. Be certain to include both names in the comment block at the top of all source code files, with a TA-BOT:MAILTO comment line including any addresses that should automatically receive results. It would be courteous to confirm with your partner when submitting the assignment.

It is time for multicore. Thus far in the term, we've been using only the first core ("Core 0") of the Raspberry Pi 3 Model B+ platform, which has four cores within its ARM Cortex-A53 processor. In prior years, this course used single-core platforms (i.e., PowerPC machines, Linksys MIPS routers, Raspberry Pi 1 boards) to build our O/S. Since 2019, Marquette has used real multicore hardware (as opposed to virtual machines) for hands-on laboratory assignments in Operating Systems. As best we can tell, we are one of the first universities in the world (possibly the first!) to do this successfully as part of a regular undergraduate course. (You're welcome!)

Now that your embedded O/S will take advantage of this multicore architecture, you need to know the multicore hardware operations available for your use.

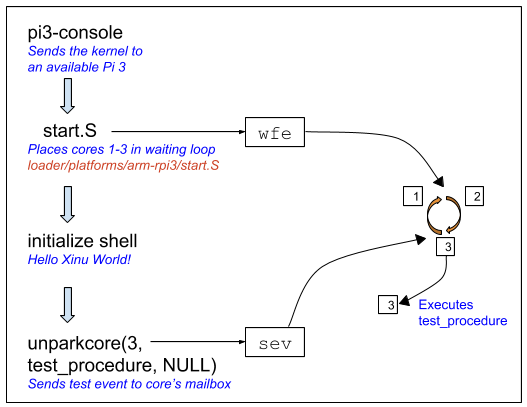

Although the concept of unparking is general to multicore platforms, the specific implementations vary from one platform to another. When the Pi 3 B+ boots, only core 0 is set up to run, and cores 1-3 are put in a sleep state. (The startup sequence in start.S executes the WFE opcode, "Wait For Event", on each of the other cores.) To wake up, or unpark, another core, core 0 sends an event with the ARM opcode SEV ("Send EVent").

Upon waking, the new core checks the value in a special predetermined location known as its mailbox. The core then begins execution at the address it finds in its mailbox.
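
One detail worth knowing: SEV signals every core waiting in WFE, not only the one being unparked, so each woken core has to look at its own mailbox and go back to sleep if nothing has been posted there. The host-side C11 simulation below sketches that handshake. It is not Xinu code: the mailboxes are ordinary globals rather than the Pi's predetermined addresses, `post_mailbox()`, `wake_core()`, and `demo_entry()` are hypothetical names, and both the release/acquire ordering and the clearing of the mailbox after it is read are choices made for this sketch.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>

#define NCORES 4

typedef void (*entry_fn)(void *);

/* Hypothetical mailboxes, one per core. On the Pi 3 B+ these live at fixed,
 * predetermined memory locations; here they are ordinary globals. */
static _Atomic(entry_fn) mailbox[NCORES];
static void *mailbox_arg[NCORES];

/* Core 0 side: publish an entry point for `core`. The argument is written
 * first, and the release store makes it visible before the entry address.
 * Real code would follow this with SEV to wake the sleeping cores. */
void post_mailbox(int core, entry_fn entry, void *arg)
{
    assert(core > 0 && core < NCORES);
    mailbox_arg[core] = arg;
    atomic_store_explicit(&mailbox[core], entry, memory_order_release);
}

/* Parked-core side: what a core does each time WFE returns. If its mailbox
 * is empty it goes back to sleep (returns 0); otherwise it clears the
 * mailbox and jumps to the posted address (returns 1). */
int wake_core(int core)
{
    entry_fn entry = atomic_exchange_explicit(&mailbox[core], (entry_fn)NULL,
                                              memory_order_acquire);
    if (entry == NULL)
        return 0;               /* event was meant for another core: back to WFE */
    entry(mailbox_arg[core]);
    return 1;
}

/* Demo target standing in for something like test_procedure(). */
int last_core_run = -1;

void demo_entry(void *arg)
{
    last_core_run = *(int *)arg;
}
```

In this sketch, `wake_core(3)` returns 0 until core 0 has called `post_mailbox(3, demo_entry, &id)`, after which it runs `demo_entry` once.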

In this example, core 3 is unparked and sent to execute a given test_procedure() with no arguments (NULL). In practice, your O/S will unpark each core with its own dedicated NULL Process.

Note: unparkcore() is already implemented for you and can be found in system/unparkcore.c.

unparkcore(int core, void* address, void* argument);
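
For reference, the core 3 example described above corresponds to a call shaped like the one in `start_core3()` below. To keep the sketch compilable off-target, `unparkcore()` here is a hypothetical stub that only records its arguments (its `void` return type is an assumption); the real version in system/unparkcore.c routes the core through setupCore() before jumping to `address`. Casting a function pointer to `void *` matches the prototype above and is accepted by GCC, although strict ISO C does not guarantee it.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical host-side stub, NOT the Xinu implementation: it just records
 * the request so the call pattern can be checked off-target. */
static int   last_core = -1;
static void *last_address = NULL;
static void *last_argument = NULL;

void unparkcore(int core, void *address, void *argument)
{
    last_core = core;
    last_address = address;
    last_argument = argument;
}

/* Placeholder for the procedure the unparked core should run. */
void test_procedure(void)
{
}

/* Core 0: send core 3 to test_procedure() with no argument (NULL). */
void start_core3(void)
{
    unparkcore(3, (void *)test_procedure, NULL);
}
```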

In the implementation of unparkcore(), you will see that it unparks the core into an assembly routine called setupCore() (found in system/setupCore.S). setupCore() is necessary because the core must undergo specific initializations before any of your code can run.

Note: Source files unparkcore.c and setupCore.S are already implemented for you.

First, make a copy of your Project 6 directory:

cp -R xinu-hw6 xinu-hw7

Then, untar the new project files on top of it:

tar xvzf ~brylow/os/Projects/xinu-hw7.tgz

New files:

New versions of existing files:

The new files provide the utilities and code for initializing the new cores, building a NULLPROC for each core, and adapting the clock system for multiple cores and preemption.

By default, the first four PIDs in the system are reserved for NULLPROC0, NULLPROC1, NULLPROC2, and NULLPROC3. As in our single-core implementation, the NULLPROC is not expected to do anything meaningful once core initialization is complete. However, the NULLPROC for a given core is always available to run, so when a core has no other work, it may twiddle its thumbs running its NULLPROC. Changes in start.S and initialize.c allocate dedicated stack space for each NULLPROC, set up the initial process control blocks, and unpark each core. A copy of the main() process is launched for each core, but only the main() process on Core 0 calls your testcases() function.
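
The NULLPROC fallback can be pictured with a toy scheduling decision. In this sketch (hypothetical names throughout; the state constants only borrow Xinu's naming style and are defined locally), PIDs 0 through 3 belong to NULLPROC0 through NULLPROC3, and a core that finds no other ready process falls back to its own NULLPROC, which is always runnable. A real resched() also manages ready lists, context switches, and locking, none of which is modeled here.

```c
#include <assert.h>

#define NCORES 4
#define NPROC  16

/* Hypothetical layout: PIDs 0..3 are reserved for NULLPROC0..NULLPROC3. */
#define NULLPROC_PID(core) (core)

/* Local stand-ins for process states; not Xinu's actual definitions. */
enum pstate { PRFREE = 0, PRREADY, PRCURR };

struct toy_pentry {
    enum pstate state;
};

struct toy_pentry proc_table[NPROC];   /* zero-initialized: every slot PRFREE */

/* Choose what `core` should run next: the lowest-numbered ready process
 * outside the reserved NULLPROC range, or else this core's own NULLPROC. */
int pick_next(int core)
{
    assert(core >= 0 && core < NCORES);
    for (int pid = NCORES; pid < NPROC; pid++) {
        if (proc_table[pid].state == PRREADY)
            return pid;
    }
    return NULLPROC_PID(core);   /* nothing else to do: twiddle thumbs */
}
```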

New clock code initializes the timer hardware on each of the four cores, setting up each to fire timer interrupts at 1 millisecond intervals. When the kernel.h PREEMPT constant is TRUE, clock interrupts will still force a call to resched(), preempting whatever process is currently running on that core. However, only Core 0 timer interrupts will update global clocktimer variables. The single preemption counter is replaced with an array of preemption counters, one per core.
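
The per-core clock behavior can be summarized in a short handler sketch. Everything here is hypothetical: `clock_tick()` stands in for the real interrupt handler, `resched_stub()` counts calls instead of switching processes, `ticks_ms` stands in for the global clock variables, and the quantum of 10 ticks is an arbitrary example value. The point is the split described above: every core counts down its own entry of the `preempt[]` array, but only core 0 advances the global clock.

```c
#include <assert.h>
#include <stdbool.h>

#define NCORES  4
#define QUANTUM 10                      /* hypothetical time slice, in 1 ms ticks */

static bool preempt_enabled = true;     /* plays the role of PREEMPT in kernel.h */
unsigned long ticks_ms = 0;             /* global clock: advanced by core 0 only */
int preempt[NCORES];                    /* one preemption counter per core */
int resched_calls[NCORES];              /* counts stand-in resched() calls */

static void resched_stub(int core)
{
    resched_calls[core]++;
}

void clock_init(void)
{
    for (int c = 0; c < NCORES; c++)
        preempt[c] = QUANTUM;
}

/* Called once per 1 ms timer interrupt on `core`. */
void clock_tick(int core)
{
    if (core == 0)
        ticks_ms++;                     /* only core 0 updates global time */

    if (--preempt[core] <= 0) {         /* this core's time slice expired */
        preempt[core] = QUANTUM;
        if (preempt_enabled)
            resched_stub(core);         /* preempt whatever runs on this core */
    }
}
```

Keeping one counter per core means a busy core 2 cannot use up core 1's time slice, while a single writer (core 0) keeps the global clock from being advanced four times per millisecond.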

With multiple cores in the system, mutual exclusion and locking of critical sections become of paramount importance. In atomic_utils.S, we provide an ARM assembly implementation of a "Compare and Swap" ("CAS") primitive that works correctly across multiple cores using the LDREX and STREX opcodes. In atomic.c, we provide functions for safely and atomically incrementing and decrementing global variables. In lock.c, we provide a simple multicore spinlock implementation. You are expected to study these and understand how they work.
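
To see the idea behind these primitives without the assembly, here is a portable C11 sketch using `<stdatomic.h>`. It is not the code in atomic_utils.S, atomic.c, or lock.c, and the function names are hypothetical. `atomic_compare_exchange_strong()` plays the role of CAS (on 32-bit ARM targets, compilers typically emit an LDREX/STREX retry loop for it), increment and decrement retry their CAS until no other core has changed the value in between, and the spinlock simply CASes a flag from 0 to 1.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Compare-and-swap: if *addr == expected, store desired and return true;
 * otherwise leave *addr unchanged and return false. */
static bool cas(atomic_int *addr, int expected, int desired)
{
    return atomic_compare_exchange_strong(addr, &expected, desired);
}

/* Atomic increment built from CAS: retry until no other core raced us. */
int atomic_increment_sketch(atomic_int *var)
{
    int old;
    do {
        old = atomic_load(var);
    } while (!cas(var, old, old + 1));
    return old + 1;
}

/* Atomic decrement, same retry pattern. */
int atomic_decrement_sketch(atomic_int *var)
{
    int old;
    do {
        old = atomic_load(var);
    } while (!cas(var, old, old - 1));
    return old - 1;
}

/* Minimal spinlock: 0 = unlocked, 1 = locked. */
typedef struct {
    atomic_int held;
} spinlock_sketch;

void spin_acquire(spinlock_sketch *l)
{
    while (!cas(&l->held, 0, 1))
        ;   /* busy-wait until the current holder releases the lock */
}

void spin_release(spinlock_sketch *l)
{
    atomic_store(&l->held, 0);
}
```

The retry loops are the essential design choice: a plain `*var += 1` is a separate load, add, and store, and two cores can interleave those steps and lose an update.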

All of your work in this assignment involves modifying existing files in your O/S, so there are no new TODOs in the files. Instead, the TODOs are listed here.

[Revised 2020 Oct 22 12:13 DWB]