Mining Privacy Settings to Find Optimal Privacy-Utility Tradeoffs for Social Network Services

Data Intensive Analysis and Computing (DIAC) Lab, The Ohio Center of Excellence on Knowledge Enabled Computing (Kno.e.sis Center), Wright State University

Introduction

Privacy is a major concern for users of social network services (SNS). In response to recent criticism of their privacy protection, most SNS now provide fine-grained privacy controls that let users set the visibility level of almost every profile item. However, these controls create difficulties of their own. First, SNS providers often set most items to the highest visibility by default to improve the utility of the social network, which may conflict with users' intentions. Fine-tuning tens of privacy settings toward the desired configuration is a daunting task for a user. Second, tuning privacy settings involves an intricate tradeoff between privacy and utility: turning off the visibility of an item to protect privacy also turns off the social utility of that item. Making this tradeoff for each individual setting is challenging for users.

We propose a framework that lets users conveniently tune their privacy settings toward a desired privacy level and desired social utilities. It mines the privacy settings of a large number of users of an SNS, e.g., Facebook, to generate latent trait models for the level of privacy concern and the level of utility preference. A tradeoff algorithm then helps users find the optimal privacy settings for a specified level of privacy concern and a personalized utility preference. We crawled a large number of Facebook accounts and derived their privacy settings with a novel method. These privacy-setting data are used to validate and showcase the proposed approach.

Research Goal

- Understand the SNS privacy problem

  - The level of "privacy sensitivity" for each personal item

  - Quantification of privacy

  - The balance between privacy and SNS utility

- Enhancement of SNS privacy

  - How to help users express their privacy concerns?

  - How to help users automate the privacy configuration with utility preference in mind?

Item Response Theory (IRT) Model

IRT is a classic model used in standardized test evaluation. For example, it estimates the ability level of an examinee from his/her answers to a number of questions. The model can also be used to understand the properties of the questions, and thus to determine whether they can properly evaluate a certain trait of the subjects. Latent trait theory can likewise be applied to measure the strength of a subject's attitude. In our study, we model an SNS user's level of privacy concern or implicit utility preference as the latent trait, using the user's privacy settings as the equivalent of the questionnaire.

$$P_{ij} = \frac{1}{1 + e^{-\alpha_j(\theta_i - \beta_j)}} \qquad (1)$$
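As a minimal sketch of the two-parameter model, assuming the standard logistic form of IRT with an item weight α, an item location β, and a latent trait level θ, the probability of a positive response can be computed as follows. The function name and the sample values are illustrative and are not taken from the study.

```python
import math


def response_probability(theta: float, alpha: float, beta: float) -> float:
    """Two-parameter logistic IRT response function.

    theta: latent trait level of the subject (e.g., privacy concern)
    alpha: item weight (discrimination)
    beta:  item location (difficulty / sensitivity level)
    """
    return 1.0 / (1.0 + math.exp(-alpha * (theta - beta)))


# When the trait level equals the item's beta, the probability is exactly 0.5.
print(response_probability(theta=0.0, alpha=1.5, beta=0.0))  # 0.5
```

A larger α makes the curve steeper around β, so the item separates subjects just below and just above β more sharply.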

Modeling Users' Privacy and Utility

We use the two-parameter IRT model to model users' privacy concerns. The model parameters are interpreted as follows:

- α - profile-item weight for a user's overall privacy concern

- β - sensitivity level of the profile item

- θ - level of a user's privacy concern

Similarly, we use the same IRT modeling method to derive a simple model for utility preference.

|

(1) |
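Once item parameters are fitted, a user's latent level can be estimated from his/her binary settings by maximum likelihood. The sketch below uses a plain grid search under the standard two-parameter logistic form. The coding of 1 as a positive response (for example, "hidden" in the privacy model), the grid range, and the item parameters are assumptions made for illustration; the experiments reported here relied on an IRT package in R, not on this code.

```python
import math


def log_likelihood(theta, settings, alphas, betas):
    """Bernoulli log-likelihood of binary settings under the 2PL model."""
    total = 0.0
    for x, a, b in zip(settings, alphas, betas):
        z = a * (theta - b)
        # Numerically stable log(p) and log(1 - p) for p = 1 / (1 + exp(-z)).
        total += -math.log1p(math.exp(-z)) if x == 1 else -math.log1p(math.exp(z))
    return total


def estimate_theta(settings, alphas, betas, lo=-4.0, hi=4.0, steps=801):
    """Return the grid point in [lo, hi] that maximizes the log-likelihood."""
    grid = [lo + (hi - lo) * k / (steps - 1) for k in range(steps)]
    return max(grid, key=lambda t: log_likelihood(t, settings, alphas, betas))


# Hypothetical item parameters for three profile items.
alphas = [1.0, 1.0, 1.0]
betas = [-1.0, 0.0, 1.0]
print(estimate_theta([1, 1, 0], alphas, betas))
print(estimate_theta([1, 0, 0], alphas, betas))
```

A user with more positive responses receives a higher estimate. The same routine would apply to the utility model by swapping in the utility item parameters.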

Weighted Personalized Privacy Setting

- Users often have a clear intention for using social networks but less knowledge about privacy.

- Users want to put a higher utility weight on certain group(s) of profile items than on others.

- Users can assign specific weights to profile items to express their preferences.

The utility IRT model can be revised into a weighted model. We develop an algorithm that incorporates these weights when learning the personalized utility model. The idea is to overweight the samples that are consistent with the target user's preference; the key is to revise the likelihood function appropriately so that it incorporates the weights during learning.
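One plausible way to bring weights into the likelihood is to scale each item's log-likelihood term by a user-supplied weight. This is only a sketch of the general idea: the exact weighting scheme of the algorithm is not spelled out here, and the function name, the item parameters, and the use of per-item weights are assumptions. The factor 1.1 mirrors the overweighting factor mentioned for the weighted utility model in the experiments.

```python
import math


def weighted_log_likelihood(phi, settings, alphas, betas, weights):
    """Log-likelihood of binary settings with a per-item weight (assumed scheme)."""
    total = 0.0
    for x, a, b, w in zip(settings, alphas, betas, weights):
        z = a * (phi - b)
        log_p = -math.log1p(math.exp(-z))  # log P(x = 1)
        log_q = -math.log1p(math.exp(z))   # log P(x = 0)
        total += w * (log_p if x == 1 else log_q)
    return total


# Hypothetical example: overweight the first two of three items by 1.1.
settings = [1, 1, 0]
alphas = [1.2, 0.8, 1.0]
betas = [-0.5, 0.0, 0.7]
print(weighted_log_likelihood(0.3, settings, alphas, betas, [1.1, 1.1, 1.0]))
```

With all weights equal to 1 the function reduces to the ordinary log-likelihood, so the unweighted utility model is a special case.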

Finding Optimal Tradeoff between Privacy and Utility

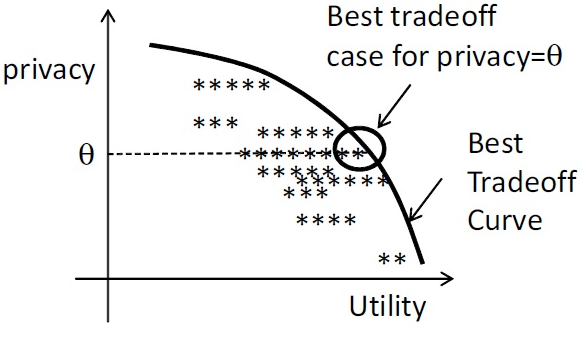

For each user in the training data, we can derive a pair of privacy concern and utility preference (θ, φ), which corresponds to a pair of privacy rating and utility rating (p, u). These pairs represent the tradeoffs between privacy and utility. We want to find the pairs that satisfy the specified level of privacy concern while maximizing the utility.
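The selection step can be sketched as a filter followed by a sort. In this illustration, "satisfying" the specified privacy level is assumed to mean that the privacy rating p lies within a tolerance of the target; the tolerance, the function name, and the sample ratings are hypothetical.

```python
from typing import List, Tuple


def best_tradeoffs(
    pairs: List[Tuple[float, float]],
    target_privacy: float,
    tolerance: float = 0.25,
    top_k: int = 3,
) -> List[Tuple[float, float]]:
    """Return up to top_k (p, u) pairs near the target privacy rating,
    ordered by decreasing utility rating."""
    candidates = [(p, u) for p, u in pairs if abs(p - target_privacy) <= tolerance]
    return sorted(candidates, key=lambda pu: pu[1], reverse=True)[:top_k]


# Hypothetical (privacy rating, utility rating) pairs, one per training user.
pairs = [(0.2, 0.9), (0.5, 0.6), (0.55, 0.8), (0.9, 0.1)]
print(best_tradeoffs(pairs, target_privacy=0.5, tolerance=0.1, top_k=1))
# [(0.55, 0.8)]
```

The settings of the users behind the returned pairs can then serve as candidate configurations for the target user.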

Experimental Results

Figure 3: β of the item model can be interpreted as how sensitive users perceive the item to be. The lower the β, the more users want to hide the item.

Figure 4: Histogram of the number of changed profile items.

Figure 5: The percentage of users who set each profile item to "disclosed".

Figure 6: The sketch of the item models for privacy modeling.

Figure 7: The number of users at different levels of privacy concern θ.

Figure 8: Comparing the privacy rating and the weighted number of hidden items. The optimal privacy configuration can be found from the blue 'x' points that are close to the red 'o' points.

Figure 9: Top: the number of users at different levels of utility preference for the unweighted utility model. Bottom: the same for the weighted utility model (overweighting "phone", "email", and "employers" by a factor of 1.1).

Figure 10: The tradeoff between privacy and utility for the unweighted utility model.

Figure 11: Each point in the tradeoff graph represents one user. The points around the upper bound contain the optimal tradeoffs.

Datasets

Binary profile visibility datasets. Download here. More datasets will be available upon request.

Tools

All experiments were done with an IRT package in R.

Download the full version of this paper.